December 26, 2023 | SNAK Consultancy

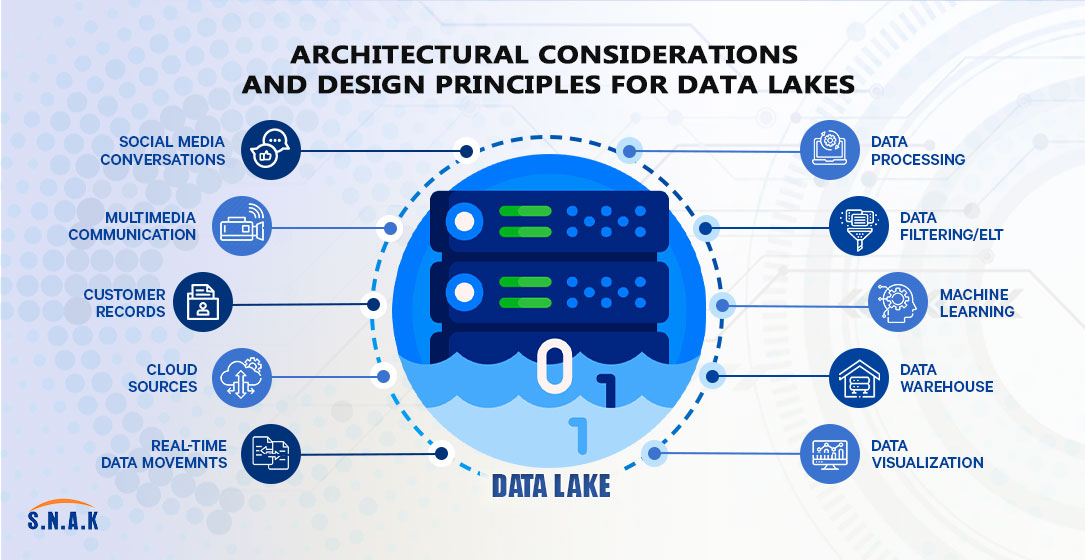

Architectural considerations and design principles for data lakes.

Organizations are turning to data lakes to store and analyze large volumes of diverse data. A well-architected data lake is more than a repository: it serves as a foundation for advanced analytics, machine learning, and business intelligence. In this post, we look at the architectural considerations and design principles that lay the groundwork for a robust and scalable data lake.

Scalability and Flexibility:

Designing a data lake with scalability in mind is essential to accommodate the growing volume and variety of data. Cloud object stores, such as Amazon S3 or Azure Data Lake Storage, provide the elasticity needed to grow storage without re-provisioning infrastructure. A flexible architecture also allows new data sources to be added without significant disruption to existing pipelines.

Data Organization and Schema-on-Read:

Unlike traditional databases, which use a schema-on-write approach, data lakes often adopt a schema-on-read strategy. Raw data is ingested without a predefined structure, and a schema is applied only when the data is read for a particular purpose. This gives greater flexibility during exploration and analysis, because the same raw data can be interpreted in different ways. Effective data organization, through metadata and a consistent folder structure, makes the data easier to discover and access.
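To make the idea concrete, here is a minimal sketch of schema-on-read in plain Python. The sample events, the `ORDER_SCHEMA` mapping, and the `year=/month=/day=` folder layout are all assumptions chosen for illustration, not a prescribed format.

```python
import json
from datetime import date

# Raw events are stored exactly as they arrive; nothing is enforced on write.
RAW_EVENTS = [
    '{"user_id": "42", "amount": "19.90", "ts": "2023-12-26"}',
    '{"user_id": "7", "amount": "5", "ts": "2023-12-27", "coupon": "XMAS"}',
    '{"user_id": "13", "ts": "2023-12-27"}',
]

# Hypothetical read-time schema: field name -> (converter, default value).
ORDER_SCHEMA = {
    "user_id": (int, None),
    "amount": (float, 0.0),
    "ts": (date.fromisoformat, None),
}


def read_with_schema(lines, schema):
    """Parse raw JSON lines and project each record onto the schema at read time."""
    for line in lines:
        record = json.loads(line)
        row = {}
        for field_name, (convert, default) in schema.items():
            value = record.get(field_name)
            # Missing fields fall back to the default; extra fields are ignored.
            row[field_name] = convert(value) if value is not None else default
        yield row


def partition_path(dataset, day):
    """Build a date-partitioned folder path (this layout is an assumption)."""
    return f"raw/{dataset}/year={day.year}/month={day.month:02d}/day={day.day:02d}/"


rows = list(read_with_schema(RAW_EVENTS, ORDER_SCHEMA))
```

A different consumer could read the same raw lines with another schema, for example one that keeps the `coupon` field, without re-ingesting anything.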

Security and Compliance:

Security is paramount in any data management strategy, and data lakes are no exception. Implement robust access controls, encryption mechanisms, and auditing features to safeguard sensitive information. Compliance with industry regulations, such as GDPR or HIPAA, should be a top priority, influencing the architecture to meet specific legal requirements.
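As a rough illustration of access control combined with auditing, the sketch below checks read requests against path-prefix grants and records every decision. The role names, the `GRANTS` table, and the in-memory `AUDIT_LOG` are hypothetical; a real deployment would rely on the access policies and audit services of the storage platform.

```python
from datetime import datetime, timezone

# Hypothetical grants: role -> path prefixes that role may read.
GRANTS = {
    "analyst": ["curated/"],
    "data_engineer": ["raw/", "curated/"],
}

# In-memory stand-in for an audit trail.
AUDIT_LOG = []


def can_read(role, path):
    """Return True if the role may read the path, and record the decision."""
    allowed = any(path.startswith(prefix) for prefix in GRANTS.get(role, []))
    AUDIT_LOG.append(
        {
            "time": datetime.now(timezone.utc).isoformat(),
            "role": role,
            "path": path,
            "allowed": allowed,
        }
    )
    return allowed
```

Denied requests are logged as well as granted ones, since both are usually relevant in a compliance review.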

Metadata Management:

Metadata serves as the backbone of a well-architected data lake. Detailed metadata, including data lineage, quality, and context, enhances data governance and simplifies the process of data discovery. Invest in metadata management tools and practices to maintain a comprehensive and up-to-date catalog.
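The following sketch shows what a very small catalog with lineage tracking might look like. `DatasetEntry`, `Catalog`, and the dataset names are invented for illustration; dedicated metadata tools offer far richer models.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    """One catalog record: where a dataset lives and where it came from."""
    name: str
    location: str
    owner: str
    description: str = ""
    upstream: list = field(default_factory=list)  # names of source datasets


class Catalog:
    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[entry.name] = entry

    def lineage(self, name):
        """Return every upstream dataset of `name`, nearest sources first."""
        seen = []
        queue = list(self._entries[name].upstream)
        while queue:
            current = queue.pop(0)
            if current in seen:
                continue
            seen.append(current)
            if current in self._entries:
                queue.extend(self._entries[current].upstream)
        return seen

    def search(self, keyword):
        """Find datasets whose name or description mentions the keyword."""
        keyword = keyword.lower()
        return sorted(
            e.name
            for e in self._entries.values()
            if keyword in e.name.lower() or keyword in e.description.lower()
        )


catalog = Catalog()
catalog.register(DatasetEntry("raw_orders", "raw/orders/", "ingest", "Order events as received"))
catalog.register(DatasetEntry("raw_fx_rates", "raw/fx/", "ingest", "Exchange rates"))
catalog.register(DatasetEntry("clean_orders", "curated/orders/", "data-eng",
                              "Deduplicated orders", upstream=["raw_orders"]))
catalog.register(DatasetEntry("daily_revenue", "curated/revenue/", "analytics",
                              "Revenue per day in EUR",
                              upstream=["clean_orders", "raw_fx_rates"]))
```

With these entries, `catalog.lineage("daily_revenue")` walks back through `clean_orders` to `raw_orders`, which is the kind of question a lineage record is meant to answer.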

Data Ingestion Strategies:

Choose the right data ingestion strategies based on the nature of your data and business requirements. Batch processing, streaming, and change data capture (CDC) mechanisms can be combined to ensure timely and accurate data updates. Employing scalable ingestion tools, such as Apache NiFi or AWS Glue, facilitates seamless data flow into the lake.
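To show how change data capture differs from reloading a full batch, here is a minimal sketch that applies a stream of change events to an existing snapshot. The event shape (`seq`, `op`, `key`, `data`) is an assumption made for this example; real CDC tools each define their own formats.

```python
def apply_changes(snapshot, changes):
    """Apply CDC events, ordered by sequence number, to a snapshot keyed by primary key."""
    result = dict(snapshot)  # leave the input snapshot untouched
    for change in sorted(changes, key=lambda c: c["seq"]):
        key = change["key"]
        if change["op"] in ("insert", "update"):
            # Merge changed columns over whatever is already stored for the key.
            result[key] = {**result.get(key, {}), **change["data"]}
        elif change["op"] == "delete":
            result.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {change['op']}")
    return result


snapshot = {
    1: {"name": "Ada", "city": "London"},
    2: {"name": "Bob", "city": "Pune"},
}

# Events may arrive out of order; the sequence number restores the order.
changes = [
    {"seq": 3, "op": "delete", "key": 2, "data": None},
    {"seq": 1, "op": "update", "key": 1, "data": {"city": "Paris"}},
    {"seq": 2, "op": "insert", "key": 3, "data": {"name": "Chen", "city": "Oslo"}},
]

current = apply_changes(snapshot, changes)
```

Only the three changed rows are transferred and processed here, which is why CDC tends to suit large tables that change slowly.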

Integration with Big Data Processing Frameworks:

To derive meaningful insights from the stored data, integrate data lakes with big data processing frameworks like Apache Spark or Apache Flink. This integration allows for parallel processing and distributed computing, enabling efficient analytics on large datasets.
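The pattern these frameworks apply can be sketched with the Python standard library: aggregate each partition independently, then combine the partial results. This is not Spark or Flink code; the thread pool merely stands in for cluster executors, and the sample partitions are made up.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Each inner list stands in for one partition (for example, one file) of sales rows.
PARTITIONS = [
    [("books", 12.0), ("games", 30.0)],
    [("books", 8.0), ("music", 5.5)],
    [("games", 10.0), ("music", 4.5)],
]


def aggregate_partition(rows):
    """Map step: sum amounts per category within a single partition."""
    totals = Counter()
    for category, amount in rows:
        totals[category] += amount
    return totals


def total_by_category(partitions, workers=3):
    """Process partitions concurrently, then merge the partial totals."""
    combined = Counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for partial in pool.map(aggregate_partition, partitions):
            combined.update(partial)  # reduce step: add partial sums together
    return dict(combined)


totals = total_by_category(PARTITIONS)
```

Because each partition is aggregated without reference to the others, the same logic can be spread across many machines, which is what a distributed framework automates.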

Monitoring and Performance Optimization:

Continuous monitoring is crucial for identifying bottlenecks and addressing issues before they affect users. Implement logging, monitoring, and alerting mechanisms to maintain the health of your data lake. Consider optimizing performance through techniques such as data partitioning, indexing, and caching, which reduce the amount of data each query has to scan.
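Partitioning pays off because a query can skip files whose partition values fall outside its filter. The sketch below illustrates this pruning step, assuming a hypothetical `year=/month=/day=` folder layout and invented file names.

```python
from datetime import date

# Hypothetical object keys in a date-partitioned dataset.
FILES = [
    "raw/orders/year=2023/month=12/day=25/part-0.json",
    "raw/orders/year=2023/month=12/day=26/part-0.json",
    "raw/orders/year=2023/month=12/day=26/part-1.json",
    "raw/orders/year=2023/month=12/day=27/part-0.json",
]


def partition_date(path):
    """Read the partition date from the key=value segments of a path."""
    parts = dict(seg.split("=", 1) for seg in path.split("/") if "=" in seg)
    return date(int(parts["year"]), int(parts["month"]), int(parts["day"]))


def prune(paths, start, end):
    """Keep only files whose partition date lies in [start, end]."""
    return [p for p in paths if start <= partition_date(p) <= end]


selected = prune(FILES, date(2023, 12, 26), date(2023, 12, 26))
```

Only the two files for 26 December are opened; the others are excluded from their paths alone, without reading any data.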

Questionnaire

Ques.1 How does the scalability of a data lake contribute to its effectiveness in handling diverse and growing datasets?

Ans. A scalable data lake can absorb growing data volumes, store many data types side by side, and support parallel processing as workloads increase. This lets capacity and cost track actual demand, so performance holds up as business needs evolve.

Ques.2 Why is the integration with big data processing frameworks essential for deriving meaningful insights from a data lake?

Ans. A data lake stores data but does not analyze it on its own. Big data processing frameworks such as Apache Spark supply the distributed, parallel computing power needed to run efficient analytics over large and complex datasets.

Ques.3 In what ways does a schema-on-read approach benefit organizations dealing with unstructured or semi-structured data?

Ans. A schema-on-read approach lets organizations ingest unstructured or semi-structured data without first defining its structure. The schema is applied only when the data is read, so the same data can be interpreted in different ways, which simplifies ingestion, exploration, and analysis of diverse data types.

Conclusion:

Architecting a data lake requires a deliberate balance of scalability, security, and flexibility. By applying these architectural considerations and design principles, organizations can build a resilient foundation that meets current data management needs and adapts as analytics and business intelligence requirements change. As data continues to play a pivotal role in decision-making, a well-architected data lake becomes a cornerstone for innovation.